Command Runner

Installation

Prerequisites

Ubuntu: Install Java

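sudo add-apt-repository ppa:webupd8team/java

sudo apt-get update

sudo apt-get install oracle-java8-installer

Note: the webupd8team Java PPA was discontinued in 2019, so these commands may fail on current systems. An OpenJDK 8 package such as openjdk-8-jdk may work instead, but this page does not confirm that Command Runner supports OpenJDK.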

Downloads

- For the latest released version: Download

DO NOT extract the command runner into your local copy of the indeni-knowledge source: if you do, you may accidentally commit it with git.

Intro

You can use CommandRunner to test .ind scripts (both interrogation and monitoring).

Common test modes:

- parse-only – Test your script against some input data (e.g., some command output you've copied from a device) without actually connecting to a live device.

- full-command – Test your script against a live, running device.

- test create – Create a unit test for an .ind script.

- test run – Run all of the unit tests for an .ind script.

To run CommandRunner

- *nix: command-runner.sh

- Windows: command-runner.bat

Unit Testing with the Command-runner

You can use command-runner to create or run unit tests for a given .ind file.

These tests get committed to the source just like an .ind script, so you can run them any time later.

These tests also run automatically every time you open or commit to a pull request (/wiki/spaces/IKP/pages/81794466).

You should run the unit tests for the .ind you are working on before you commit (especially before you open or commit to a pull request), to make sure you haven't broken anything.

Example of how to create a unit test:

Run the command from the directory in which the .ind files are located, indeni-knowledge/parsers/src/checkpoint/firewall/interface-fake-tx-hang (the command and its output are shown on separate lines for readability):

command-runner test create ../interface-fake-tx-hang/interface-fake-tx-hang.ind.yaml 0 input.txt

2020-04-21 15:32:00,689 INFO -- Starting command runner

2020-04-21 15:32:01,134 INFO -- Preparing test case '0' for command '../interface-fake-tx-hang/interface-fake-tx-hang.ind.yaml'

2020-04-21 15:32:03,170 INFO -- Successfully prepared test case

2020-04-21 15:32:03,171 INFO -- Exiting

Parameters:

| Parameter | Description |
|---|---|
| indeni-knowledge/parsers/src/checkpoint/firewall/interface-fake-tx-hang/interface-fake-tx-hang.ind.yaml | The script we're creating the test for |
| 0 | The name of the test |
| input.txt | The path to an input file containing the input data for the test |
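If you create many test cases, you may prefer to script this call. The Python sketch below only assembles the arguments in the order given in the table (script, test name, input file) and passes them to subprocess.run. The helper names are hypothetical, and it assumes command-runner is on your PATH; on Windows you would pass command-runner.bat as the runner.

```python
import subprocess
from typing import List


def build_test_create_cmd(script: str, test_name: str, input_file: str,
                          runner: str = "command-runner") -> List[str]:
    """Return the argv for 'command-runner test create <script> <test name> <input file>'.

    The positional order mirrors the parameters table; no other flags are assumed.
    """
    return [runner, "test", "create", script, test_name, input_file]


def create_test(script: str, test_name: str, input_file: str,
                runner: str = "command-runner") -> int:
    """Run the create command from the current directory and return its exit code."""
    cmd = build_test_create_cmd(script, test_name, input_file, runner)
    return subprocess.run(cmd).returncode


if __name__ == "__main__":
    # Hypothetical example, meant to be run from the .ind file's directory (note the leading ../).
    cmd = build_test_create_cmd(
        "../interface-fake-tx-hang/interface-fake-tx-hang.ind.yaml", "0", "input.txt")
    print(" ".join(cmd))
```

Calling create_test() from the script's directory is then equivalent to typing the command by hand.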

Guidelines for Creating Unit Tests

- The test name can be anything; choose a descriptive name.

- Input file: a file you create manually, containing the output of the command that your script runs on a device.

E.g., the interface-fake-tx-hang.ind.yaml script runs a grep command against Check Point firewalls, so the input file for its test would contain the output of running the same grep command on a Check Point device.

The command above creates all the test files under the corresponding test folder. See here for an example.

- Include the two dots and the forward slash (../) at the start of the script path when you run the command; otherwise the command may fail.

- The device from which you got your test input has an OS or model version. Add that version to the end of your test case name. E.g., if the test input data was gathered from a Check Point gateway running GAIA OS version R80.20, your test name would be "my_test_which_tests_this_thing_R8020". Write the version in all capitals, without dots, hyphens, underscores, or other separators.

This is not mandatory: if you do not have many cases to test, you can simply use the name 0.

- Your tests should cover every possible path through the code.

- Each test should test a different path in the code.

- After your script has been running in production, if someone finds a bug in it, before you fix the bug, it is good practice to first create a test case which reproduces the bug: then, when the test passes, you know for sure that the bug is fixed.
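The version suffix rule above is mechanical, so a small helper makes it easy to apply consistently. A minimal Python sketch; the function names are hypothetical, and joining the description and the suffix with an underscore follows the example name given above:

```python
import re


def version_suffix(version: str) -> str:
    """'R80.20' -> 'R8020': upper-case, then drop everything except A-Z and 0-9."""
    return re.sub(r"[^A-Z0-9]", "", version.upper())


def make_test_name(description: str, version: str) -> str:
    """Append the normalized version to a descriptive test name."""
    return f"{description}_{version_suffix(version)}"


print(make_test_name("my_test_which_tests_this_thing", "R80.20"))
# my_test_which_tests_this_thing_R8020
```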

File types

- input.json

This file is only used in /wiki/spaces/IKP/pages/75890692; otherwise you can ignore it. (It just holds a pointer to the 'input_0_0' file.)

- input_0_0

This file holds the input data supplied when you created the test; it's an exact copy of that file. The name is arbitrary, but don't change it or you will break the test.

- output.json

This is the output of the test run. It contains the metric information of the IND script, in JSON format.

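output.json (the # annotations below are explanatory and are not part of the real file, since JSON does not allow comments):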

...

{

"type": "ts", # Type of the data. 'ts' is for time series.

"tags": { # Metadata for this value

"im.name": "memory-total-kbytes", # Indeni Metric Name

"im.dstype": "gauge", # Indeni Metric Data Storage Type

"im.step": 300 # Monitoring interval (from script META) in seconds

},

"value": 1019996.0, # The actual value of the metric

"timestamp": 1511229564483 # Milliseconds since the Unix epoch

}

...

This JSON represents the test output for a single metric, 'memory-total-kbytes'. Most output.json files contain many metrics and their associated data.

Once you've created a test, be sure to validate the output.json; make sure it has all the data you expect.

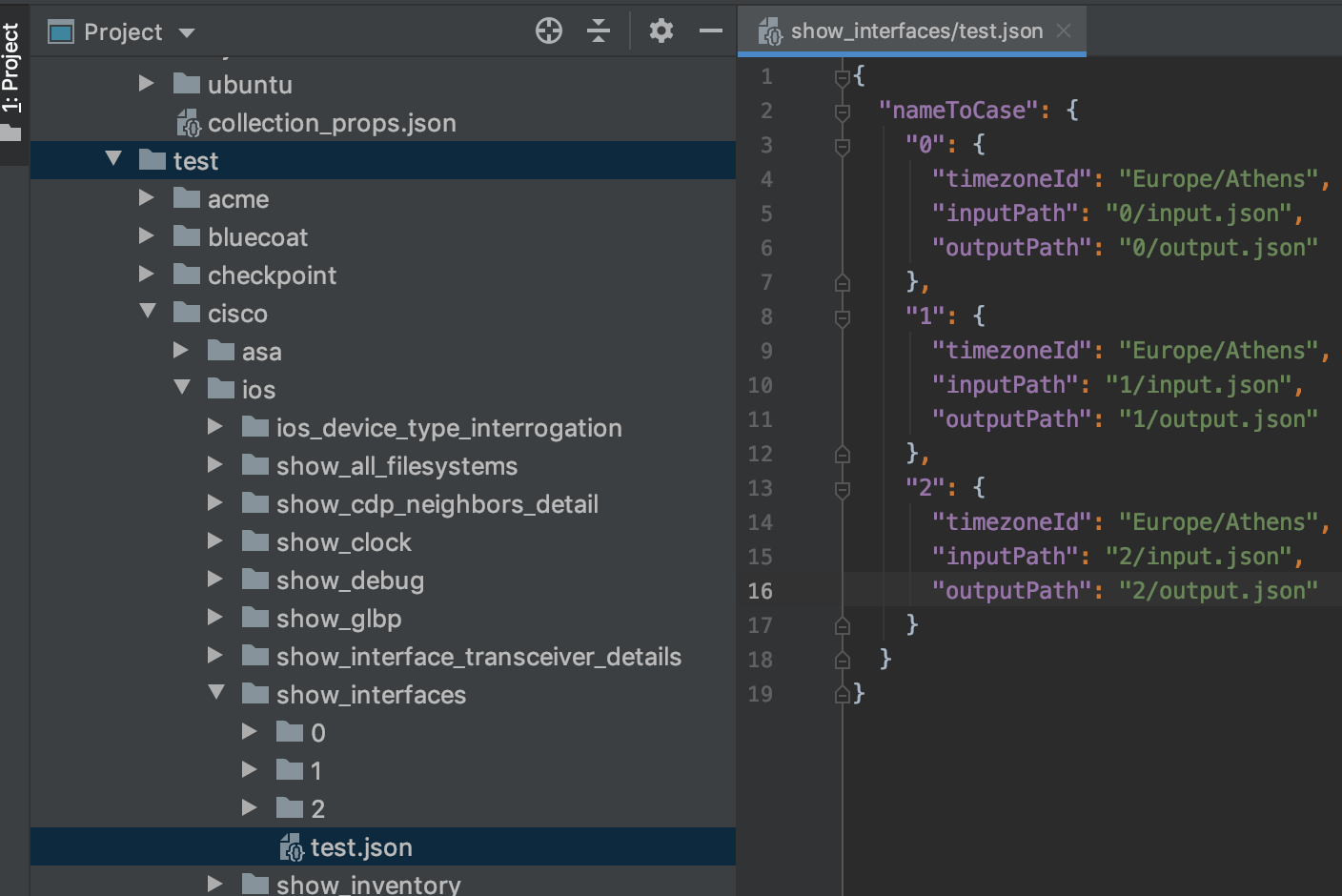
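Checking the output by eye gets tedious when a script emits many metrics. The Python sketch below counts the entries per im.name tag and reports which expected metric names are missing. It assumes, without confirmation from this page, that output.json is a JSON array of objects shaped like the example above; if your file is structured differently, adjust the loading step. The function names are hypothetical.

```python
import json
from collections import Counter
from pathlib import Path
from typing import Iterable, Set


def metric_counts(output_json: Path) -> Counter:
    """Count the entries for each 'im.name' tag in an output.json file.

    ASSUMPTION: the top level is a JSON array of metric objects like the example
    above, each with a 'tags' object that holds 'im.name'.
    """
    with output_json.open(encoding="utf-8") as f:
        entries = json.load(f)
    return Counter(entry.get("tags", {}).get("im.name") for entry in entries)


def missing_metrics(output_json: Path, expected: Iterable[str]) -> Set[str]:
    """Return the expected metric names that never appear in output.json."""
    return set(expected) - set(metric_counts(output_json))


if __name__ == "__main__":
    # Hypothetical check, run inside a test case folder.
    path = Path("output.json")
    if path.exists():
        print(metric_counts(path))
        print(missing_metrics(path, ["memory-total-kbytes"]))
```

An empty set from missing_metrics() means every metric you listed is present; it does not check the values, which you still need to review.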

test.json

Every .ind script has a test.json file in its tests folder under indeni-knowledge/parsers/test.

This is a test manifest. It lists all the test cases in the same folder.

command-runner runs the tests that are listed in test.json.

When a new test is created, command-runner adds an entry for it to test.json.

If you rename or delete your test, you'll have to manually update test.json to reflect the changes.

Running Tests

Here's an example of how to run a test:

/projects/indeni/indeni-knowledge/parsers/src/checkpoint/clish $ command-runner test run clish-config-unsaved.ind

2018-10-17 12:58:38,245 INFO -- Starting command runner

2018-10-17 12:58:39,232 INFO -- Running test for command 'clish-config-unsaved.ind'

2018-10-17 12:58:42,325 INFO -- Running test case 'could-not-acquire-the-config-lock'

2018-10-17 12:58:42,469 INFO -- Running test case 'unable-to-get-user-permission'

2018-10-17 12:58:42,495 INFO -- Running test case 'user-denied-access'

2018-10-17 12:58:42,540 INFO -- Running test case 'failed-to-build-acl'

2018-10-17 12:58:42,583 INFO -- Test of command 'clish-config-unsaved.ind' has been completed successfully

2018-10-17 12:58:42,583 INFO -- Exiting

Example notes:

- command-runner ran 5 tests

- All tests completed successfully.

- The test cases themselves reside in a different directory, under /indeni-knowledge/parsers/test/checkpoint/clish/clish-config-unsaved/:

- /indeni-knowledge/parsers/test/checkpoint/clish/clish-config-unsaved/clish-config-unsaved

- /indeni-knowledge/parsers/test/checkpoint/clish/clish-config-unsaved/could-not-acquire-the-config-lock

- /indeni-knowledge/parsers/test/checkpoint/clish/clish-config-unsaved/unable-to-get-user-permission

- /indeni-knowledge/parsers/test/checkpoint/clish/clish-config-unsaved/user-denied-access

- /indeni-knowledge/parsers/test/checkpoint/clish/clish-config-unsaved/failed-to-build-acl

- You can also run a single test case of the script by passing its name with -c:

command-runner test run clish-config-unsaved.ind -c unable-to-get-user-permission
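If you want to rerun a script's cases one at a time, for example to narrow down a failure, you can read the case names from its test folder. The Python sketch below assumes the src-to-test folder mapping seen in this example and only prints the -c commands rather than running them; the helper names and the checkout location are hypothetical.

```python
from pathlib import Path
from typing import List


def case_root_for(repo_root: Path, script: Path) -> Path:
    """Map parsers/src/<dirs>/<name>.ind to parsers/test/<dirs>/<name>.

    ASSUMPTION: follows the clish-config-unsaved layout above; the stem is taken as
    everything before the first dot, so 'a.ind' and 'a.ind.yaml' both give 'a'.
    """
    rel = script.relative_to(repo_root / "parsers" / "src")
    stem = rel.name.split(".", 1)[0]
    return repo_root / "parsers" / "test" / rel.parent / stem


def list_cases(case_root: Path) -> List[str]:
    """Test cases are subdirectories; files such as test.json are skipped."""
    return sorted(p.name for p in case_root.iterdir() if p.is_dir())


def single_case_cmd(script_name: str, case: str,
                    runner: str = "command-runner") -> List[str]:
    """argv for 'command-runner test run <script> -c <case>'."""
    return [runner, "test", "run", script_name, "-c", case]


if __name__ == "__main__":
    repo = Path("indeni-knowledge")  # hypothetical checkout location
    script = repo / "parsers" / "src" / "checkpoint" / "clish" / "clish-config-unsaved.ind"
    root = case_root_for(repo, script)
    if root.is_dir():
        for case in list_cases(root):
            print(" ".join(single_case_cmd(script.name, case)))
```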

Test Failure

In this next example, the test case 'failed-to-build-acl' fails because the actual result differs from the expected result:

2017-10-31 19:33:35,635 INFO -- Starting command runner

2017-10-31 19:33:35,644 INFO -- Running test for command 'C:\indeni-knowledge\parsers\src\checkpoint\clish\clish-config-unsaved.ind'

2018-10-17 12:58:42,325 INFO -- Running test case 'could-not-acquire-the-config-lock'

2018-10-17 12:58:42,469 INFO -- Running test case 'unable-to-get-user-permission'

2018-10-17 12:58:42,495 INFO -- Running test case 'user-denied-access'

2018-10-17 12:58:42,540 INFO -- Running test case 'failed-to-build-acl'

2017-10-31 19:33:39,587 ERROR -- Critical failure running command runner

java.lang.AssertionError: Result doesn't have the same metrics as expected. Expected: Set(DoubleMetric(Map(im.dstype -> gauge, im.dstype.displaytype -> boolean,im.name-> config-unsaved, live-config -> true, display-name -> Configuration Unsaved?),1.0,0)), but got: Set(DoubleMetric(Map(im.dstype -> gauge, im.dstype.displaytype -> boolean,im.name-> config-unsaved, live-config -> true, display-name -> Configuration Unsaved?),0.0,0))

at indeni.collector.commandrunner.testing.CommandParsingTester.indeni$collector$commandrunner$testing$CommandParsingTester$$assertResult(CommandParsingTester.scala:170)

at indeni.collector.commandrunner.testing.CommandParsingTester$$anonfun$runTest$2$$anonfun$apply$1.apply$mcV$sp(CommandParsingTester.scala:100)

....

Here, an AssertionError has occurred: it tells us that the result differs from what was expected, then describes what was expected (Expected:) and what the parser actually produced (but got:). In this case, the only difference is the metric value: 1.0 was expected, but the parser produced 0.0.

To see the difference more easily, copy both results into a text editor and compare them, or, if the results are very large, use a diff tool.
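For long metric sets, a short script can do the comparison. This Python sketch assumes the "Expected: ..., but got: ..." wording shown above, puts each comma-separated field on its own line, and prints a unified diff using the standard difflib module. Splitting on commas is a readability heuristic, not a parser for the Set(...) notation; the function names are hypothetical.

```python
import difflib
import re
from typing import List, Tuple


def split_assertion(message: str) -> Tuple[str, str]:
    """Split '... Expected: X, but got: Y' into (X, Y)."""
    match = re.search(r"Expected:\s*(.*?),\s*but got:\s*(.*)", message, re.DOTALL)
    if match is None:
        raise ValueError("no 'Expected: ..., but got: ...' text found")
    return match.group(1).strip(), match.group(2).strip()


def explode_fields(metrics: str) -> List[str]:
    """Put each comma-separated field on its own line."""
    return [field.strip() for field in metrics.split(",")]


def diff_assertion(message: str) -> str:
    """Unified diff between the expected and the actual metrics."""
    expected, got = split_assertion(message)
    return "\n".join(difflib.unified_diff(
        explode_fields(expected), explode_fields(got),
        fromfile="expected", tofile="got", lineterm=""))
```

Pass it just the java.lang.AssertionError line, e.g. print(diff_assertion(message)). On the failure above, the only changed line is the metric value, 1.0 versus 0.0.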

A test can also fail because of a syntax error or some other problem in the script:

2017-10-31 19:37:26,789 INFO -- Starting command runner

2017-10-31 19:37:26,795 INFO -- Running test for command 'C:\indeni-knowledge\parsers\src\checkpoint\clish\clish-config-unsaved.ind'

2018-10-17 12:58:42,325 INFO -- Running test case 'could-not-acquire-the-config-lock'

2018-10-17 12:58:42,469 INFO -- Running test case 'unable-to-get-user-permission'

2017-10-31 19:37:30,666 ERROR -- failed to parse results of command: chkp-clish-config-unsaved, Failure(indeni.collector.ParsingFailure: Header = Parse Error, Description = Command [] chkp-clish-config-unsaved parser failed with input: unsaved 1505144920 installer:last_sent_da_info 1505144921, Message = Header = Execution Error, Description = Failed to execute AWK code, Message = For input string: "0,", , )

2017-10-31 19:37:30,669 ERROR -- Critical failure running command runner

java.lang.AssertionError: Parsing failed

at indeni.collector.commandrunner.testing.CommandParsingTester$$anonfun$runTest$2$$anonfun$apply$1.apply$mcV$sp(CommandParsingTester.scala:97)

These are the same types of errors you may encounter when running command-runner with either the parse-only or the full-command action.
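In a long run, a quick way to see which case failed is to find the last "Running test case" entry before the first ERROR. A Python sketch, assuming the "date time,ms LEVEL -- message" layout seen in these logs, one entry per line, and that cases run one after another; the function name is hypothetical.

```python
import re
from typing import Iterable, Optional, Tuple

# <date> <time>,<ms> <LEVEL> -- <message>, as in the logs above.
LOG_LINE = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) (\w+) -- (.*)$")
CASE_START = re.compile(r"Running test case '([^']+)'")


def first_failure(log_lines: Iterable[str]) -> Optional[Tuple[Optional[str], str]]:
    """Return (case that was running, error message) for the first ERROR entry.

    Returns None when there is no ERROR entry. The case is None if the error came
    before any 'Running test case' entry. Lines without the timestamp prefix, such
    as stack-trace lines, are skipped.
    """
    current_case: Optional[str] = None
    for line in log_lines:
        match = LOG_LINE.match(line.strip())
        if match is None:
            continue
        level, message = match.group(2), match.group(3)
        started = CASE_START.search(message)
        if started:
            current_case = started.group(1)
        elif level == "ERROR":
            return current_case, message
    return None
```

Applied to the first failure log above, it reports 'failed-to-build-acl'; applied to the second, 'unable-to-get-user-permission'.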

Examples

- Test a single collection script you wrote against saved output of its command (unit test):

bash command-runner.sh parse-only mpstat.ind -f mpstat.input

- Test a single collection script against a live device, using SSH credentials. When testing a single collection script, the "requires" portion of the META is ignored:

bash command-runner.sh full-command --ssh someuser,somepass vsx-stat.ind 10.3.3.72

Test using the SNMPv2c protocol:

bash command-runner.sh full-command --snmp2 <community> vsx-stat.ind 10.3.3.72

Test using the SNMPv3 protocol:

bash command-runner.sh full-command --snmp3 <security-level>,<security-name>,<auth-protocol>,<auth-passphrase>,<privacy-protocol>,<privacy-passphrase> vsx-stat.ind 10.3.3.72

Security level options: [NOAUTH_NOPRIV | AUTH_NOPRIV | AUTH_PRIV]

Authentication protocol options: [MD5 | SHA]

Privacy protocol options: [DES | 3DES | AES128 | AES192 | AES256]

- Test a group of scripts by providing the folder they are in. Interrogation scripts are run first, according to the normal operation of the Collector.

bash command-runner.sh full-command --ssh someuser,somepass parsers 10.3.3.72

- Test a group of scripts with the API key:

bash command-runner.sh full-command --api-key SOMEKEY parsers 10.3.3.72
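The --snmp3 value packs six fields into one comma-separated argument, which is easy to get wrong by hand. A Python sketch that checks the fields against the option lists above and builds the full-command arguments; the helper names and the example credentials are hypothetical. This page does not say whether the protocol fields can be omitted for NOAUTH_NOPRIV, or how a comma inside a passphrase would be escaped, so the sketch requires all six fields and rejects commas.

```python
from typing import List

SECURITY_LEVELS = {"NOAUTH_NOPRIV", "AUTH_NOPRIV", "AUTH_PRIV"}
AUTH_PROTOCOLS = {"MD5", "SHA"}
PRIVACY_PROTOCOLS = {"DES", "3DES", "AES128", "AES192", "AES256"}


def snmp3_arg(level: str, name: str, auth_protocol: str, auth_passphrase: str,
              privacy_protocol: str, privacy_passphrase: str) -> str:
    """Join the six --snmp3 fields in the documented order after checking the option lists."""
    if level not in SECURITY_LEVELS:
        raise ValueError(f"unknown security level: {level!r}")
    if auth_protocol not in AUTH_PROTOCOLS:
        raise ValueError(f"unknown authentication protocol: {auth_protocol!r}")
    if privacy_protocol not in PRIVACY_PROTOCOLS:
        raise ValueError(f"unknown privacy protocol: {privacy_protocol!r}")
    fields = [level, name, auth_protocol, auth_passphrase, privacy_protocol, privacy_passphrase]
    # The fields are comma-separated and no escaping rule is documented, so refuse commas.
    if any("," in field for field in fields):
        raise ValueError("SNMPv3 fields must not contain commas")
    return ",".join(fields)


def snmp3_full_command(script: str, host: str, snmp3: str) -> List[str]:
    """argv for 'bash command-runner.sh full-command --snmp3 <fields> <script> <host>'."""
    return ["bash", "command-runner.sh", "full-command", "--snmp3", snmp3, script, host]


if __name__ == "__main__":
    fields = snmp3_arg("AUTH_PRIV", "monitor", "SHA", "authpass", "AES128", "privpass")
    print(" ".join(snmp3_full_command("vsx-stat.ind", "10.3.3.72", fields)))
    # bash command-runner.sh full-command --snmp3 AUTH_PRIV,monitor,SHA,authpass,AES128,privpass vsx-stat.ind 10.3.3.72
```

Passing the list to subprocess.run, rather than a single shell string, keeps passphrases with shell metacharacters intact.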

Notes:

1. You can add --verbose if you'd like to see debug information (output from the debug() function in your script).

2. If you run into the error message "No such variable 'api-key' in '/api?type=op&cmd=<show><ntp></ntp></show>&key=${api-key}'" in the logs, replace ${api-key} with ${credentials.api-key} in your script.
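If several scripts still use the old variable, the replacement in note 2 can be applied mechanically. A minimal Python sketch with hypothetical helper names; it is a plain string replacement, so review the changes with git diff before committing:

```python
from pathlib import Path

OLD_REF = "${api-key}"
NEW_REF = "${credentials.api-key}"


def fix_api_key_refs(text: str) -> str:
    """Replace every ${api-key} reference with ${credentials.api-key}.

    Safe to run twice: the new reference does not contain the old one.
    """
    return text.replace(OLD_REF, NEW_REF)


def fix_file(path: Path) -> bool:
    """Rewrite the script in place and return True if anything changed."""
    original = path.read_text(encoding="utf-8")
    fixed = fix_api_key_refs(original)
    if fixed != original:
        path.write_text(fixed, encoding="utf-8")
    return fixed != original
```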